1. Main Content

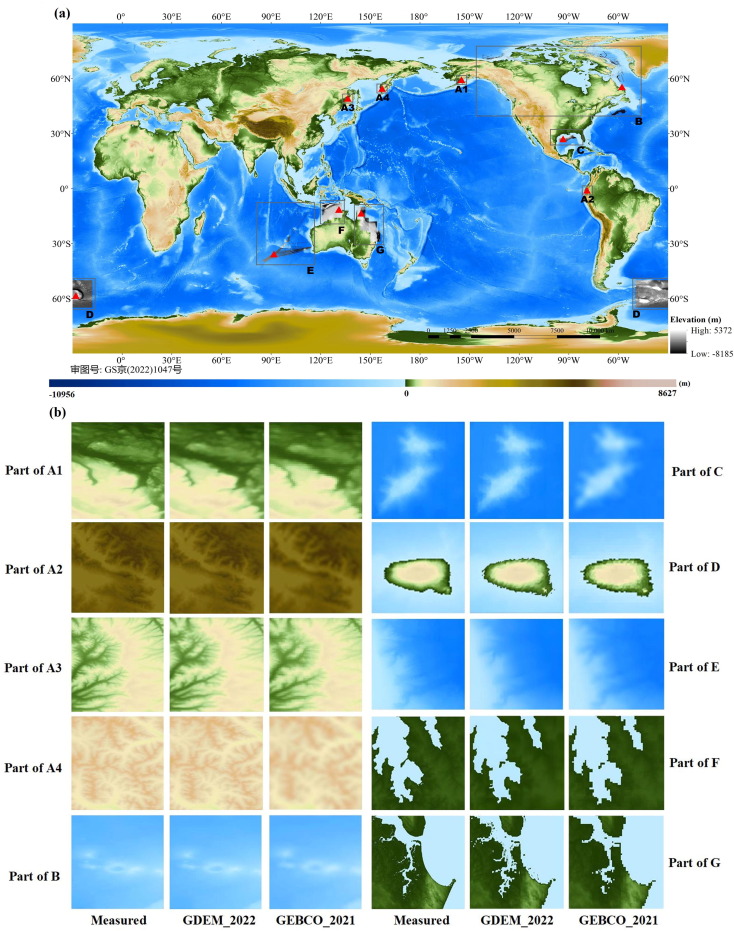

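Since the 2020s, NASADEM and FABDEM have provided free 30 m resolution DEMs of the world's land surface. Acquiring bathymetric data is far harder, and fusing depth data from different resolutions and sensors is highly challenging. This paper uses super-resolution (SR), a deep learning technique, to identify and compensate for the differences between resolutions and sensors. It fuses the 30 m NASADEM, GEBCO_2021, and numerous high-resolution (HR) regional ocean DEM datasets into a global DEM dataset with 3 arc-second resolution.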

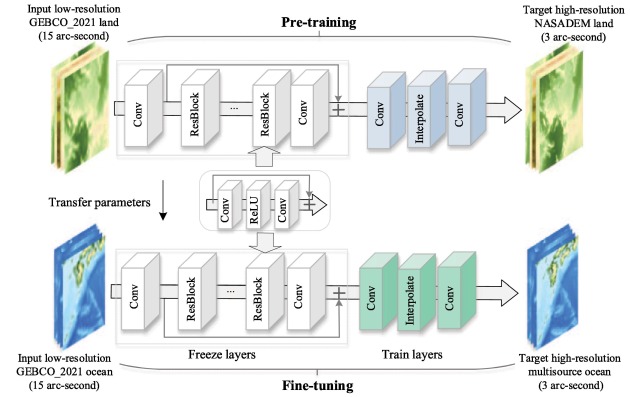

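A deep learning network trained on a land sample set yields a model that applies only to the land domain. There is evidence that land and ocean topography are very similar, and plate movements cause the oceans and land to evolve constantly. Transfer learning uses knowledge learned in a source domain to solve a new but related problem in a target domain. This study therefore exploits the similarity between land and ocean to compensate for the relative scarcity of bathymetric data.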

NASADEM is a 1 arc-second (30 m) dataset covering 80% of the Earth's land area between 60°N and 56°S. It contains no bathymetric data.

The GEBCO_2021 Grid provides global coverage on a 15 arc-second grid with 43,200 rows and 86,400 columns, 3,732,480,000 data points in total.
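These grid figures follow directly from the cell size: latitude spans 180° and longitude 360°, and 1° is 3600 arc-seconds. The minimal bash sketch below uses a hypothetical helper, `grid_cells`. Applied to a 3 arc-second grid, the same arithmetic gives the roughly 93.3 billion pixels quoted in the English original.

```bash
#!/bin/bash
# Rows, columns and total cell count of a global lat/lon grid for a given
# cell size in arc-seconds (180 deg of latitude, 360 deg of longitude).
grid_cells() {
  local arcsec=$1
  local rows=$(( 180 * 3600 / arcsec ))
  local cols=$(( 360 * 3600 / arcsec ))
  echo "$rows $cols $(( rows * cols ))"
}

grid_cells 15   # GEBCO_2021 grid  → 43200 86400 3732480000
grid_cells 3    # 3 arc-second grid → 216000 432000 93312000000
```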

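Ocean DEMs still have much lower resolution than land DEMs. The paper's main contribution is to pre-train the neural network on GEBCO_2021 and NASADEM land samples, then fine-tune it with the limited regional ocean DEM data.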

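The land HR DEM dataset was produced by downsampling the 1 arc-second NASADEM with bicubic interpolation. TanDEM-X 3 arc-second data were chosen as land test regions to validate the model's accuracy.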
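Bicubic interpolation, used here for resampling and later as the baseline that DEM-SRNet is compared against, applies a cubic convolution kernel separably along rows and then columns. The sketch below shows the 1-D step with the Keys kernel and a = -0.5. That parameter is an assumption: ArcGIS and GDAL may use other cubic variants, and when downsampling they may widen the kernel. `cubic_interp` is a hypothetical helper.

```bash
#!/bin/bash
# 1-D cubic convolution with the Keys kernel (a = -0.5).
# Usage: cubic_interp p_m1 p0 p1 p2 t
# Samples sit at positions -1, 0, 1, 2; t in [0,1) is the offset from p0 toward p1.
cubic_interp() {
  awk -v pm1="$1" -v p0="$2" -v p1="$3" -v p2="$4" -v t="$5" '
    function w(x,   a, ax) {
      a = -0.5; ax = (x < 0) ? -x : x
      if (ax <= 1) return (a + 2) * ax^3 - (a + 3) * ax^2 + 1
      if (ax < 2)  return a * ax^3 - 5 * a * ax^2 + 8 * a * ax - 4 * a
      return 0
    }
    BEGIN {
      # weight each neighbour by the kernel evaluated at its distance from t
      v = pm1 * w(t + 1) + p0 * w(t) + p1 * w(t - 1) + p2 * w(t - 2)
      printf "%.4f\n", v
    }'
}

cubic_interp 100 120 150 160 0.5   # → 135.6250 (elevations in metres)
```

At t = 0 the kernel returns p0 exactly, so the original samples are preserved. This interpolating property is one reason the method is a common baseline.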

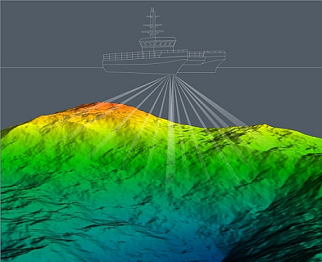

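Other HR regional ocean DEM datasets, obtained from single-beam and multibeam surveys, chart data, and satellite inversion, were downsampled and used for transfer learning and testing.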

All DEM datasets use the WGS84 coordinate system and the EGM96 geoid, and their grayscale values were normalized to the range 0 to 1.
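The text only says the values were normalized to 0-1. One common way to do this is min-max scaling, sketched below. The choice of min-max, and of computing the minimum and maximum over the whole input rather than per patch, are assumptions. `normalize` is a hypothetical helper that reads one elevation per line.

```bash
#!/bin/bash
# Min-max scaling of elevations to [0, 1] (assumed scheme; the source only
# says values were normalized to 0-1). Reads one value per line from stdin.
normalize() {
  awk '
    { x = $1 + 0; v[NR] = x
      if (NR == 1 || x < min) min = x
      if (NR == 1 || x > max) max = x }
    END {
      range = max - min
      for (i = 1; i <= NR; i++) {
        s = (range > 0) ? (v[i] - min) / range : 0   # flat input maps to 0
        printf "%.4f\n", s
      }
    }'
}

printf '%s\n' -5000 -1200 0 1000 3000 | normalize
# → 0.0000 0.4750 0.6250 0.7500 1.0000 (one per line)
```

To map model outputs back to metres, the same min and max must be kept, using value * (max - min) + min.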

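To build the training and validation sets, a random 80/20 split was applied to a dataset of about 579,900 globally distributed land samples. The transfer learning and test dataset used for fine-tuning contains about 28,500 ocean samples, evenly distributed across the world's oceans. The entire dataset was preprocessed with ArcGIS 10.3 and Python GDAL. HR NASADEM and the regional ocean DEMs were split into 160 × 160 pixel patches, and the LR (low-resolution) GEBCO_2021 into 32 × 32 pixel patches. Missing DEM values were removed before the data were split into patches.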
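The two patch sizes are chosen so that an HR patch and an LR patch cover the same ground footprint. The ratio 160 / 32 = 5 equals the 15″ to 3″ scale factor. A quick bash check, with the 80/20 split sizes for the 579,900 land samples; `footprint_arcsec` and `split_80_20` are hypothetical helpers:

```bash
#!/bin/bash
# Ground footprint of a square patch: pixels * arc-seconds per pixel.
footprint_arcsec() { echo $(( $1 * $2 )); }

hr=$(footprint_arcsec 160 3)    # HR patch: 160 px at 3 arc-seconds
lr=$(footprint_arcsec 32 15)    # LR patch: 32 px at 15 arc-seconds
echo "HR ${hr} arcsec, LR ${lr} arcsec, scale factor $(( 160 / 32 ))"
# → HR 480 arcsec, LR 480 arcsec, scale factor 5

# Sizes of an 80/20 train/validation split (integer arithmetic).
split_80_20() {
  local total=$1 train=$(( $1 * 80 / 100 ))
  echo "$train $(( total - train ))"
}
split_80_20 579900   # → 463920 115980
```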

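The paper proposes DEM-SRNet, a deep residual network pre-trained on the land DEM data. Compared with bicubic (traditional) interpolation, it improves RMSE by 23.75% on average. In the land test regions, DEM-SRNet also outperforms existing super-resolution (SR) models in terms of RMSE.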
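The RMSE comparison above can be reproduced with the two small awk helpers below, `rmse` and `improvement` (both hypothetical). The input pairs and RMSE values in the usage lines are made up for illustration and are not the paper's data.

```bash
#!/bin/bash
# RMSE between reference and predicted elevations.
# Input: one "reference prediction" pair per line.
rmse() {
  awk '{ d = $1 - $2; s += d * d; n++ } END { printf "%.4f\n", sqrt(s / n) }'
}

# Relative improvement in percent: (RMSE_baseline - RMSE_model) / RMSE_baseline * 100
improvement() {
  awk -v b="$1" -v m="$2" 'BEGIN { printf "%.2f\n", (b - m) / b * 100 }'
}

printf '100 103\n200 197\n300 303\n400 397\n' | rmse   # → 3.0000
improvement 10 7.5                                     # → 25.00
```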

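Super-resolution covers software or hardware methods that increase image resolution. In its classic multi-frame form, the core idea is to trade temporal bandwidth for spatial resolution. When a single very-high-resolution image cannot be captured, several low-resolution images are taken and combined into one high-resolution image; this process is called super-resolution reconstruction. DEM-SRNet instead follows the learning-based single-image approach: a trained network maps one low-resolution input (15 arc-second GEBCO_2021) to a high-resolution output (3 arc-second).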

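The mean absolute vertical error between GDEM_2022 and the HR regional ocean DEM datasets is 19.58 m, with more than 63.99% of absolute errors below 10 m. In clarity and detail, GDEM_2022 outperforms GEBCO_2021. Future work will focus on improving the SR model's ability to identify and remove systematic errors in low-resolution inputs. Insufficient samples remain a limitation of the current work, and obtaining more HR ocean samples to improve the model's generalization and accuracy is an important topic for the future. The authors expect the GDEM_2022 dataset and the DEM-SRNet model (for obtaining HR bathymetric data worldwide) to grow the DEM data community and motivate other research groups to achieve better results.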
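Both accuracy figures come from the per-pixel absolute error: the MAE is its mean, and the "below 10 m" share counts how many errors fall under the threshold. A minimal awk sketch follows; `mae_and_share` is a hypothetical helper and the sample depths are invented.

```bash
#!/bin/bash
# Mean absolute error and the share of absolute errors below a threshold.
# Input: "reference prediction" per line; threshold in metres (default 10).
mae_and_share() {
  awk -v thr="${1:-10}" '
    { d = $1 - $2; if (d < 0) d = -d      # absolute error
      s += d; n++
      if (d < thr) k++ }
    END { printf "MAE=%.2f m, %.2f%% below %s m\n", s / n, 100 * k / n, thr }'
}

printf '%s\n' '-100 -104' '-200 -215' '-300 -302' '-400 -430' | mae_and_share 10
# → MAE=12.75 m, 50.00% below 10 m
```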

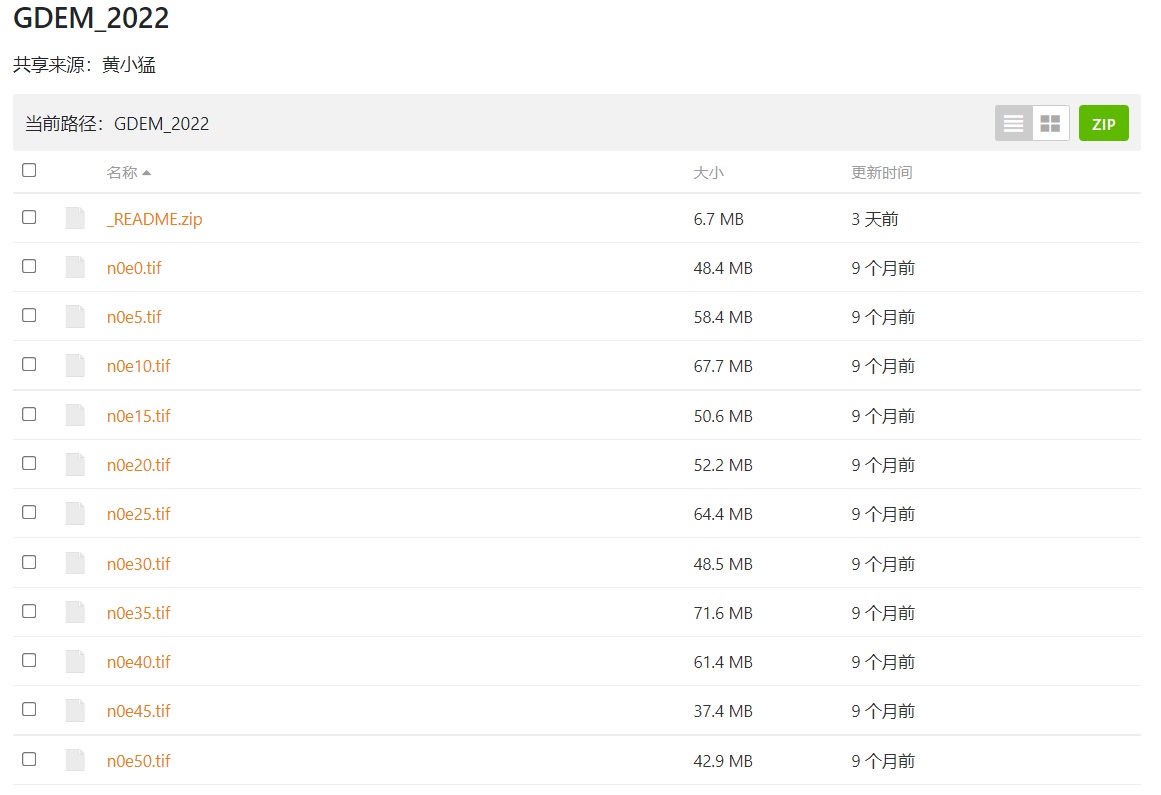

2. Download

GDEM2022 download link: https://cloud.tsinghua.edu.cn/d/695ed43696564904980f/?p=%2F&mode=list

Download the tiff files that cover the area you need.

To download the tiff tiles for a given range, e.g. 15-35N, 30-45E, you can use the following script:

```bash
#!/bin/bash
# Download the GDEM2022 GeoTIFF tiles covering 15-35N, 30-45E from the Tsinghua
# Cloud share. Tile naming: n/s latitude + e/w longitude in 5-degree steps
# (e.g. e0-e175, w5-w180, s5-s85); change the prefixes and loop bounds for
# other regions.
share="https://cloud.tsinghua.edu.cn/d/695ed43696564904980f/files/?p=/"

# dload.sh records the resolved download commands so they can be re-run later.
echo '#!/bin/bash' > dload.sh

for ((i = 15; i <= 35; i += 5)); do
  for ((j = 30; j <= 45; j += 5)); do
    tile="n${i}e${j}.tif"
    # HEAD request (-I) that follows redirects (-L); keep the last Location
    # header (case-insensitive) and strip the trailing carriage return.
    url=$(curl -sIL "${share}${tile}&dl=1" | grep -i '^location:' | tail -n 1 \
      | sed 's/^[Ll]ocation:[[:space:]]*//' | tr -d '\r')
    if [ -z "$url" ]; then
      echo "No download link found for ${tile}, skipping" >&2
      continue
    fi
    curl -C - -o "$tile" "$url"    # resumable download, saved under the tile name
    echo "curl -C - -o \"$tile\" \"$url\"" >> dload.sh
  done
done
```

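Save the script above as cmd.sh and run bash cmd.sh in an MSYS2 terminal (any bash shell with curl also works, e.g. on Linux or macOS). The tiff files are written to the current working directory, which is the directory of cmd.sh if you run the command from there.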

3. Summary

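GDEM2022 is the first global dataset publicly released by Chinese scholars that I have come across, and the authors deserve a big round of applause. Most of GEBCO's open-ocean data are derived from satellite gravity inversion, and in using it we have noticed shortcomings in its fine detail. Fortunately, this work applies transfer learning, an advanced technique in the same lineage as the currently hugely popular ChatGPT, to improve on GEBCO in detail and clarity. This is a tremendous boon for ocean practitioners.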

References:

https://blog.csdn.net/giselite/article/details/128873456

https://www.sciencedirect.com/science/article/pii/S2095927322005412


Original English text

Obtaining multi-source satellite geodesy and ocean observation data to map high-resolution global digital elevation models (DEMs) is a formidable task at present. The key theoretical and technological obstacles to fine modeling must be overcome before the geographical distribution of ocean topography and plate movement patterns can be explored. Geodesy, oceanography, global climate change, seafloor plate tectonics, and national ocean interests will all benefit greatly from this data assistance. Global DEMs finer than 100 m resolution were not available until the 2020s, when the world's 30 m resolution land NASADEM and FABDEM were made freely available [1]. Acquiring bathymetric data is far more challenging than collecting terrestrial surface data, since sonar-based procedures are needed. Because of the large quantity of data, making global DEM maps with higher resolution takes more time and requires more computational resources [2]. Fusing these data raises various accuracy difficulties because of the different resolutions and sensors. A deep learning technique known as super-resolution (SR) has been used to identify and compensate for the differences between resolutions and sensors [3,4]. The 30 m resolution NASADEM, GEBCO_2021 data, and numerous high-resolution (HR) regional ocean DEM datasets are all publicly available, making it feasible to generate a global DEM dataset with a 3 arc-second resolution.

A large number of training samples are needed to generate a robust deep learning network model. Although there is a large quantity of terrestrial data, a model trained on a terrestrial sample set is only applicable to the terrestrial domain. There is evidence that the topography of the land and oceans is very similar, and plate movements cause the oceans and land to evolve constantly [5]. Transfer learning can use knowledge learned in a source domain to solve a new but related problem in a target domain [6]. This study therefore leverages the similarities between land and ocean to compensate for the relative lack of bathymetric data. NASADEM is a dataset with a resolution of 1 arc-second (30 m) that covers 80% of the Earth's land area between 60°N and 56°S latitude, without bathymetry data. It uses improved phase unwrapping to reduce data voids, giving greater completeness, and provides additional SRTM radar-related data products [7]. The GEBCO_2021 Grid provides global coverage on a 15 arc-second grid with 43,200 rows and 86,400 columns, for a total of 3,732,480,000 data points [8]. Compared with land regional DEM datasets, the resolution of ocean regions remains poor, and enormous areas of the ocean are sampled fairly coarsely [3]. The main contribution of this paper is pre-training the neural network model with GEBCO_2021 and NASADEM land samples, followed by additional fine-tuning with limited regional ocean DEM data. The HR DEM dataset corresponding to the GEBCO_2021 land area was formed by downsampling the 1 arc-second NASADEM with the bicubic interpolation method. The TanDEM-X 3 arc-second data were obtained by TerraSAR-X's "twin" satellite using synthetic aperture radar (SAR) interferometry. Certain terrestrial area investigations have shown it to be more accurate than other global DEMs [9].
However, unlike NASADEM, no gap filling or interpolation was applied to these data, so invalid data exist [10]. TanDEM-X 3 arc-second data (A1–A4 in Fig. 1a) were chosen for testing terrestrial regions to better validate the accuracy of the model. Other HR regional ocean DEM datasets, obtained by single-beam, multibeam, chart data, and satellite inversion technologies, were downsampled for transfer learning and testing (B–G in Fig. 1a). The acquisition period, sensor type, post-processing, and geographical extent vary between these ocean DEM datasets (listed in Table S1 online). The reference systems used in these DEM datasets also varied; some were gathered as orthometric heights referenced to the EGM96 geoid. To ensure consistency, each dataset was converted to the WGS84 coordinate system before the difference between the local geoid and the EGM96 geoid was subtracted from the reference DEM values. All DEM datasets were finally converted to the EGM96 geoid. The grayscale values of the DEM were normalized to values between 0 and 1 to accelerate model convergence.
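The vertical-datum step above rests on the standard relation h = H + N. Here h is ellipsoidal height, H is orthometric height, and N is geoid undulation, with both geoid models referred to the same WGS84 ellipsoid; this is why the coordinate system is unified first. The relation is textbook geodesy, not a formula quoted from the paper:

$$
\begin{aligned}
h &= H_{\mathrm{loc}} + N_{\mathrm{loc}} = H_{\mathrm{EGM96}} + N_{\mathrm{EGM96}} \\
H_{\mathrm{EGM96}} &= H_{\mathrm{loc}} - \left(N_{\mathrm{EGM96}} - N_{\mathrm{loc}}\right)
\end{aligned}
$$

For example, suppose the local geoid lies 2 m above EGM96 ($N_{\mathrm{loc}} - N_{\mathrm{EGM96}} = 2$ m). A depth of $-50$ m in the local datum then becomes $-48$ m relative to EGM96. The sign of the correction depends on which way the geoid difference is taken.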

To generate the training and validation sets in this study, a random 80/20 split was performed on the dataset, which contained approximately 579,900 globally distributed land sample units. The transfer learning and testing dataset for fine-tuning contained approximately 28,500 ocean samples that were evenly distributed across the oceanic regions of the world. During the preprocessing phase, ArcGIS 10.3 and Python GDAL were used to preprocess the entire dataset. HR NASADEM and regional ocean DEMs were split into 160 × 160 pixel patches, while LR (low-resolution) GEBCO_2021 was split into 32 × 32 pixel patches. Missing DEM values were removed from the dataset prior to patching.

A deep residual network called DEM-SRNet is proposed for pre-training on the terrestrial DEM data. As illustrated in Fig. 2, the pre-training structure is derived from the enhanced deep super-resolution network (EDSR) [11]. The first convolutional layer of the pre-trained DEM-SRNet network extracts a collection of features. The EDSR model's default number of residual blocks was expanded to 32 [5]. Through experimental comparison, the optimal number of residual blocks (ResBlocks) is 42. Each ResBlock is composed of two convolutional layers with a ReLU activation function between them. The ResBlocks are followed by a convolutional layer and an element-wise addition layer (+ symbol in Fig. 2). The upsampling part that follows includes:

- a convolution layer for extracting feature maps;
- an interpolating layer with a scale factor of 5, which upsamples the 15 arc-second low-resolution input to the 3 arc-second target resolution;
- a final convolutional layer, which aggregates the feature maps in the low-resolution space and generates the SR output data.

Unlike typical deconvolutional layers, the interpolation layer lets low-resolution data be super-resolved without checkerboard artifacts [12]. When there are a large number of feature maps, the training process becomes unstable; setting the residual scaling [11] to 0.1 solves the problem.
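The layer totals given in the next paragraph can be reconciled with 42 ResBlocks. This assumes each ResBlock contributes two convolutions and one skip addition, and that the head convolution, body convolution (plus global skip addition), pre-upsampling convolution and output convolution are counted once each. That breakdown is a reading of the description, not the authors' code. The rough parameter estimate further assumes 256 feature maps (EDSR's width), 3 × 3 kernels, single-band input/output and no biases. Both helpers, `layer_counts` and `param_estimate`, are hypothetical.

```bash
#!/bin/bash
# Layer counts for an EDSR-style network with N ResBlocks (assumed layout):
# head conv + 2 convs per block + body conv + pre-upsampling conv + output conv.
layer_counts() {
  local blocks=$1
  local convs=$(( 1 + 2 * blocks + 1 + 1 + 1 ))
  local adds=$(( blocks + 1 ))          # one per ResBlock + global skip
  echo "conv=$convs add=$adds interp=1"
}

# Rough parameter count: F->F 3x3 convs plus 1->F and F->1 convs, biases ignored.
param_estimate() {
  local blocks=$1 f=$2
  local inner=$(( 2 * blocks + 2 ))     # F->F convolutions
  echo $(( inner * 9 * f * f + 2 * 9 * f ))
}

layer_counts 42         # → conv=88 add=43 interp=1
param_estimate 42 256   # → 50729472
```

The estimate lands within 0.01% of the reported 50,734,080. The small remainder depends on details (biases, channel counts) that the text does not give.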

The network is made up of 88 convolutional layers, 43 element-wise addition layers, and one interpolating layer. The kernel size of each convolutional layer is set to 3, and the padding is set to 1. There are 50,734,080 network parameters in total. During the training phase, the model is trained on the large dataset with the Adam optimizer, an initial learning rate of 0.0001, and exponential learning-rate decay. Early stopping with a patience of 6 terminates training once the model's performance on the validation set begins to deteriorate, which prevents overfitting. Mini-batch gradient descent typically requires 300 epochs to train the pre-training network from scratch. For fine-tuning, the initial parameters are taken from the network pre-trained on terrestrial data. Frozen layers of this pre-trained network are then combined with the limited HR ocean DEMs to fine-tune the global DEM-SR model. Because fine-tuning samples are limited, the learning rate strongly affects convergence, so it is lowered to 0.00001.
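Early stopping with a patience of 6 can be illustrated with a short awk sketch. It stops once the validation loss has not improved for 6 consecutive epochs and reports the best epoch. The exponential decay schedule is shown as lr_t = lr0 · γ^t. The text does not give the decay rate, so γ is a free parameter here, and both helpers (`early_stop`, `lr_at`) are hypothetical. The loss values are invented.

```bash
#!/bin/bash
# Early stopping: read one validation loss per epoch and stop after `patience`
# consecutive epochs without a new best loss.
early_stop() {
  awk -v patience="${1:-6}" '
    NR == 1 || $1 < best { best = $1 + 0; best_ep = NR; bad = 0; next }
    { bad++ }                               # no improvement this epoch
    bad >= patience { stop = NR; exit }     # exit still runs the END block
    END { printf "best_epoch=%d stop_epoch=%d\n", best_ep, (stop ? stop : NR) }'
}

# Exponential decay lr_t = lr0 * gamma^t (gamma is not given in the text).
lr_at() { awk -v lr0="$1" -v g="$2" -v t="$3" 'BEGIN { printf "%.3e\n", lr0 * g ^ t }'; }

printf '%s\n' 0.50 0.40 0.35 0.36 0.37 0.36 0.38 0.39 0.40 0.41 | early_stop 6
# → best_epoch=3 stop_epoch=9
lr_at 1e-4 0.5 1   # → 5.000e-05
```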

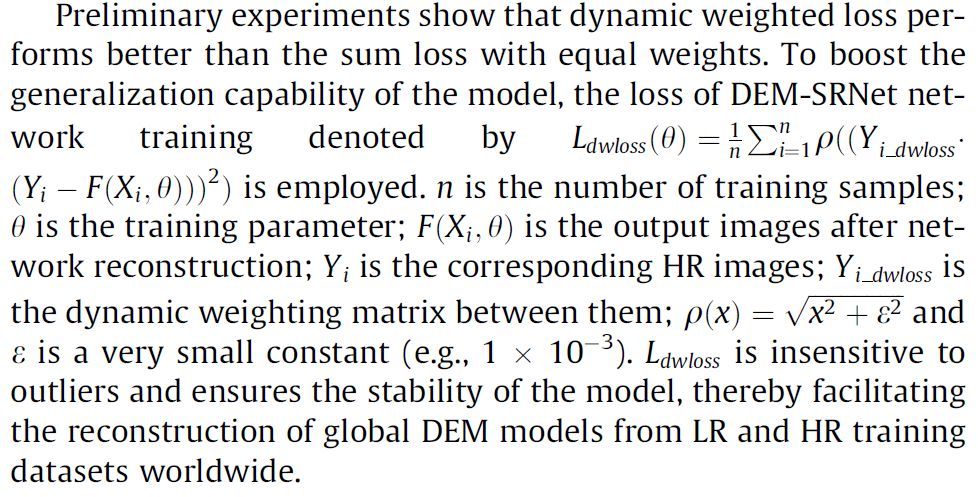

Preliminary experiments show that a dynamic weighted loss performs better than a sum loss with equal weights. To boost the generalization capability of the model, DEM-SRNet is trained with the loss

$$L_{\mathrm{dwloss}}(\theta) = \frac{1}{n}\sum_{i=1}^{n} \rho\Big(\big(Y_i^{\mathrm{dwloss}} \odot (Y_i - F(X_i;\theta))\big)^2\Big), \qquad \rho(x) = \sqrt{x^2 + \varepsilon^2},$$

where $n$ is the number of training samples, $\theta$ is the training parameter, $F(X_i;\theta)$ is the output image after network reconstruction, $Y_i$ is the corresponding HR image, $Y_i^{\mathrm{dwloss}}$ is the dynamic weighting matrix between them, and $\varepsilon$ is a very small constant (e.g., $1 \times 10^{-3}$). $L_{\mathrm{dwloss}}$ is insensitive to outliers and ensures the stability of the model, thereby facilitating the reconstruction of global DEM models from LR and HR training datasets worldwide.
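A minimal sketch of the loss as written above, treating each input line as one pixel. The text does not explain how the dynamic weights are computed, so they are simply passed in as the first column, and averaging over pixels rather than over whole images is a simplification. `dwloss` is a hypothetical helper; ε defaults to 1e-3 as in the text.

```bash
#!/bin/bash
# Weighted loss following the formula above:
#   L = (1/n) * sum rho((w * (y - f))^2),  rho(x) = sqrt(x^2 + eps^2)
# Input lines: "weight reference prediction"; optional argument: eps.
dwloss() {
  awk -v eps="${1:-0.001}" '
    { r = $1 * ($2 - $3)                 # weighted residual
      x = r * r                          # squared, as in the formula
      s += sqrt(x * x + eps * eps); n++ }
    END { printf "%.6f\n", s / n }'
}

printf '1 0.5 0.5\n1 1 0\n2 1 0.5\n' | dwloss   # → 0.667000
```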

The global DEM super-resolution ultimately reconstructs 90 m (3 arc-second) resolution global raster images, which requires computing elevation values for approximately 93.3 billion pixels. The training sample data used as model inputs total 64 GB. This study employs Huawei's Ascend 910 AI (artificial intelligence) platform, which includes two Ascend 910 CPUs with 48 cores and 512 GB of RAM (random access memory). For model development, MindSpore employs the open-source Python programming language environment [13], the hybrid host-and-accelerator training mode for distributed training, and the MindRecord format for data processing and format conversion. All experiments run on ModelArts, an open cloud platform based on MindSpore that aims to improve overall AI development efficiency by providing ample computing and storage resources.

As a result, "GDEM_2022", a 3 arc-second global DEM dataset, was developed. The GDEM_2022 ocean data were inherited from the 15 arc-second resolution ocean bathymetry map GEBCO_2021. GEBCO_2021 incorporates bathymetric data from various sources, such as the IHO Data Centre for Digital Bathymetry (IHO-DCDB) and crowdsourced bathymetric data [8], which indirectly become DEM-SRNet training samples. To further boost the DEM-SRNet model's credibility, we incorporated as many high-resolution ocean datasets as feasible to supplement the data samples, as shown in Fig. 1a, B–G. Please note that we downsampled NASADEM data with the bicubic interpolation method for replacement in terrestrial areas. The gathered HR regional ocean DEM datasets were integrated and updated for the GDEM_2022 ocean areas. Modern multibeam echosounders can obtain higher-resolution ocean topography data than satellite altimeter-based technology. However, mapping the ocean at 100 m resolution this way would take billions of dollars and around 200 ship-years [14]. The SR approach not only expedites the precise mapping of the seafloor and the generation of global HR bathymetric maps but also produces such maps at a lower cost than the equipment-based method. DEM-SRNet still achieves good super-resolution in glacierized locations such as the Arctic Circle, as shown by the worldwide topographic distribution in Fig. 1a. Table 1 shows an average RMSE improvement of 23.75% for DEM-SRNet over bicubic (traditional) interpolation. Land testing regions show that the DEM-SRNet model outperforms the existing SR models in terms of RMSE.

Residual-based approaches such as ESRGAN and EDSR perform better in ocean area testing than convolutional networks such as SRCNN and VDSR. As shown in Table 2, the average reduction in MAE was 19.58 m, with over 63.99% of absolute errors less than 10 m globally. When GDEM_2022 and GEBCO_2021 are compared, the most notable difference is the increased spatial detail in GDEM_2022, as shown in Fig. 1b. In some instances, GDEM_2022 terrestrial areas have better visual quality and detail than their predecessors (part of A1–A4 in Fig. 1b). GDEM_2022 performs better in coastal areas, reducing jagged coastlines to a minimum (part of D, F, and G in Fig. 1b; Fig. S1 online). GDEM_2022 can improve bathymetric mapping in marine and gulf areas by reducing blur in ocean areas (part of B, C, and E in Fig. 1b).

In summary, this study presents the development of a new 3 arc-second global DEM dataset, "GDEM_2022". It can meet the need for ocean topographic data in basic science and practical applications, e.g., resource exploration, tsunami hazard assessment, and territorial claims under the Law of the Sea [14]. Similar efforts in urban DEM super-resolution [6,15] and partial ocean area super-resolution [3] have been made, but never on a global scale. The experiments in Table 2 demonstrate that the mean absolute vertical error between GDEM_2022 and the HR regional ocean DEM datasets reached 19.58 m, with over 63.99% of absolute errors less than 10 m. In terms of clarity and detail, GDEM_2022 outperforms GEBCO_2021. Future efforts will concentrate on enhancing the SR model's capacity to identify and eradicate systematic errors in low-resolution input images. Insufficient samples remain a limitation of our current work. Obtaining more HR ocean sample datasets to improve model generalizability and accuracy is a crucial topic for future work. We anticipate that the GDEM_2022 dataset and the DEM-SRNet model for obtaining HR bathymetric data from around the world will expand the DEM data community and motivate other research groups to accomplish superior outcomes.
